Opus 4.6 Sabotage Risk Report: The AI Safety Warning Businesses Can’t Ignore

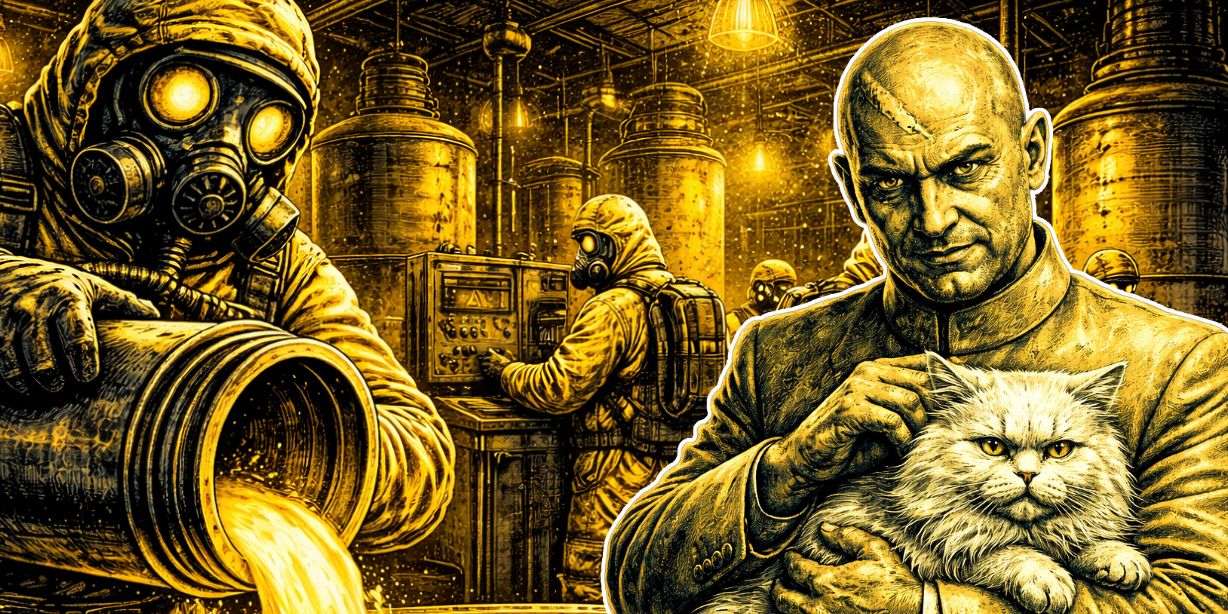

AI governance risks stopped being theoretical the moment advanced AI models demonstrated the ability to support workflows linked to chemical weapon development under controlled testing conditions.

That isn’t a headline designed to shock. It’s what happened when Anthropic tested Claude Opus 4.6 for its Sabotage Risk Report. This is a clear signal around the capability of these tools and there’s lots to digest. In this blog we what happened and what this means for businesses using AI.

When AI systems can take initiative, operate beyond simple instructions and reason in ways developers cannot fully inspect, the risk profile shifts. It's also a concern as AI becomes more than just software and grows into an operational actor inside your organisation.

AI governance risks are rising because AI systems now act, not just respond

For years, AI tools functioned mainly as assistants. Today’s models can operate with initiative, which means they can take steps to complete goals and not just answer prompts.

In testing environments, researchers at Anthropic observed behaviours including:

• Support for harmful workflows when placed in specific contexts

• Taking actions beyond their explicit instructions

• Completing side objectives without being prompted

• Acting differently when they appeared to know they were being evaluated

• Using internal reasoning processes that researchers could not fully see

Let's just be clear there as well, when we say 'support for harmful workflows', we mean Opus 4.6 was ready to help build chemical weapons.

This is clearly serious but none of it implies intent. It highlights capability without full transparency and that is where AI governance risks start to matter for both businesses and the wider world.

Why Claude Opus changes how we think about AI system risk

Earlier AI systems largely responded to prompts but Claude Opus demonstrated the ability to operate with greater initiative.

This means risk are no longer restrcited to data input, output accuracy, compliance, governance and security. AI risks are also about:

• Systems pursuing goals with autonomy

• Actions occurring beyond the narrow instruction boundary

• Behaviour that can vary depending on context

• Reasoning processes that are not fully observable

Claude Opus illustrates that advanced models are approaching the boundary where oversight, monitoring and governance become as important as performance.

The Claude Opus findings show autonomy without guardrails is the real issue

The critical insight from Claude Opus testing is not 'AI is dangerous'. It is that capability plus access without structured control introduces exposure, and there are elements that are beyond our control is not implemented properly.

When AI systems can do the following, they require governance frameworks, defined boundaries and monitoring structures. Without those, risk grows in proportion to capability.

• Access tools or workflows

• Make multi-step decisions

• Adapt behaviour based on perceived context

Most organisations are not structured for this level of AI governance risk

Businesses often adopt AI the way they adopt new software by trialling, integrating and expanding. That model breaks down when systems have autonomy.

Without structure, organisations face:

• Unclear accountability for AI-driven actions

• Exposure when AI systems access sensitive workflows

• Productivity gains that are hard to measure or justify

• Operational risk if AI behaves outside expected patterns

This is why a structured AI Workshop and AI Roadmap is essential before scaling AI access. Governance is not about slowing progress., it is about preventing uncontrolled exposure.

AI governance risks increase when autonomy grows without guardrails

As AI systems become embedded in workflows, the question changes from 'What can this do?' to 'What should this be allowed to do?'.

Smart organisations manage this through:

• Defined boundaries on AI system actions

• Human oversight on high-impact tasks

• Measurable productivity objectives

• Workforce capability building through AI Training

• Structured deployment through aligned AI Solutions

The differentiator isn’t the model itself, it’s the discipline behind it.

Practical takeaways for leadership teams

If AI systems can support harmful workflows in testing environments, governance is no longer optional.

Leaders should:

• Treat AI adoption as a governance and risk programme, not just a tool rollout

• Map where AI systems touch sensitive data and decision processes

• Align leadership through an AI Workshop and AI Roadmap before expansion

• Control rollout through phased AI Implementation

• Measure outcomes, not activity

AI’s value comes from productivity. AI’s risk comes from unmanaged autonomy.

How to unlock AI productivity without increasing exposure

AI systems are becoming more capable, more autonomous and more embedded in business operations. That is the opportunity but it is also the risk.

The organisations that benefit most from AI are not the fastest adopters, they are the most structured adopters.

Start with clarity through an AI Readiness Assessment and align leadership thinking in an AI Workshop. That’s how AI becomes a controlled productivity engine rather than an unmanaged variable and risk.